Introduction

In the rapidly evolving landscape of artificial intelligence and geospatial analysis, the ability to accurately detect changes over time is paramount. Whether monitoring urban expansion, assessing disaster damage, or tracking deforestation, professionals rely on sophisticated tools to interpret complex data. Enter TransDS, a framework that is raising the standards of remote sensing. Short for “Transformer-based Dual-Stream” architecture, TransDS represents a significant step forward from traditional convolutional neural networks (CNNs), offering more precise results in change detection tasks.

The significance of TransDS lies in its ability to handle the intricate details of high-resolution imagery. Traditional models often struggle with the “semantic gap”, the difference between low-level pixel data and high-level interpretation. TransDS bridges this gap by leveraging the power of self-attention mechanisms, originally popularized in natural language processing by models like BERT and GPT, and applying them to visual data. This article serves as your comprehensive guide to understanding TransDS. We will dismantle its complex architecture, explore its real-world applications, and demonstrate why it is gaining ground among data scientists and GIS specialists worldwide. Prepare to dive deep into a technology that is not just analyzing the world, but helping us save it.

What is TransDS? A Technical Overview

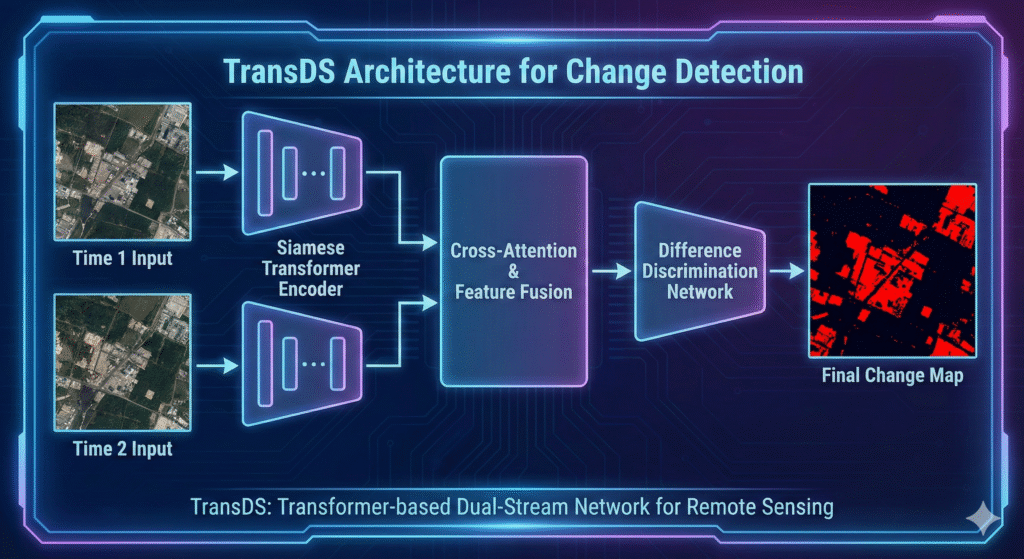

At its core, TransDS is a deep learning model designed specifically for change detection in bi-temporal remote sensing images. Unlike older methods that compare images pixel-by-pixel, TransDS utilizes a dual-stream Siamese network structure enhanced with Transformer modules. This allows it to capture global context, seeing the “big picture” rather than just focusing on local features.

- Dual-Stream Structure: Processes two images (taken at different times) simultaneously to identify differences.

- Transformer Backbone: Replaces or augments standard CNN layers to model long-range dependencies within the image.

- Feature Fusion: Intelligently combines information from both time points to generate a precise change map.

By integrating these components, TransDS overcomes the limitations of limited receptive fields found in standard CNNs, ensuring that even subtle changes in complex environments are detected with high accuracy.

The Evolution from CNNs to Transformers

To appreciate the value of TransDS, one must understand the history of computer vision. Convolutional Neural Networks (CNNs) were the industry standard for years, excellent at detecting edges and textures. However, they struggle with global context. If a building looks different due to lighting but hasn’t actually changed, a CNN might be fooled.

TransDS introduces the Transformer mechanism to solve this. Transformers utilize “self-attention,” a mathematical technique that weighs the importance of different parts of an image relative to each other.

- CNN Limitation: Focuses on local neighborhoods of pixels.

- Transformer Advantage: Calculates relationships between distant pixels, understanding context (e.g., a shadow vs. a new structure).

- Hybrid Approach: TransDS often combines the best of both, using CNNs for detail and Transformers for context.
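To make the self-attention idea concrete, here is a minimal single-head sketch in NumPy. It is an illustration only, not the TransDS implementation: the projection matrices are random rather than learned, and the token count, dimensions, and function names are assumptions chosen for the example.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability.
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    tokens: (n_tokens, d_model) array, one row per image patch.
    w_q, w_k, w_v: (d_model, d_head) projection matrices.
    Returns (output, weights); weights[i, j] is how strongly
    patch i attends to patch j.
    """
    q = tokens @ w_q
    k = tokens @ w_k
    v = tokens @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    weights = softmax(scores, axis=-1)
    return weights @ v, weights

# Hypothetical sizes: a 4x4 grid of patches, each embedded in 32 dimensions.
rng = np.random.default_rng(0)
n_tokens, d_model, d_head = 16, 32, 8
tokens = rng.normal(size=(n_tokens, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_head)) for _ in range(3))

output, weights = self_attention(tokens, w_q, w_k, w_v)
print(output.shape)   # (16, 8)
print(weights.shape)  # (16, 16)
```

The `weights` matrix has one row and one column per patch, so every patch is compared with every other patch regardless of distance. That all-pairs comparison is what gives a Transformer its global context, in contrast to the fixed local window of a convolution.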

Key Architecture Components of TransDS

The efficacy of the TransDS framework relies on several specialized modules working in harmony. The architecture is generally composed of an encoder, a feature extractor, and a decoder. The “Dual-Stream” aspect refers to two identical encoders processing the “before” and “after” images in parallel.

- Siamese Encoders: Two identical networks that extract features from the multi-temporal images.

- Self-Attention Module: The heart of the Transformer, enabling the model to focus on relevant changes while ignoring noise.

- Difference Discrimination Network: A specialized layer that categorizes the extracted features into “changed” or “unchanged” classes.

This modular design allows TransDS to be highly flexible, capable of being fine-tuned for specific types of terrain or urban environments.
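The dual-stream idea can be sketched without any deep learning framework. The example below is a deliberately simplified illustration under stated assumptions: the "encoder" is a single random linear projection of image patches (a real model would use learned CNN and Transformer layers), and the "difference discrimination" step is a plain distance threshold. All function names and sizes are hypothetical.

```python
import numpy as np

def to_patches(image, patch):
    """Split an (H, W, C) image into flattened, non-overlapping patches."""
    h, w, c = image.shape
    rows, cols = h // patch, w // patch
    grid = image[:rows * patch, :cols * patch].reshape(rows, patch, cols, patch, c)
    return grid.transpose(0, 2, 1, 3, 4).reshape(rows * cols, patch * patch * c)

def encode(image, w_embed, patch):
    """Shared (Siamese) encoder: the same weights are used for both dates."""
    return np.tanh(to_patches(image, patch) @ w_embed)

def change_map(img_t1, img_t2, w_embed, patch, threshold):
    """Compare per-patch features from the two streams and threshold the distance."""
    feat_t1 = encode(img_t1, w_embed, patch)
    feat_t2 = encode(img_t2, w_embed, patch)
    distance = np.linalg.norm(feat_t1 - feat_t2, axis=1)
    rows, cols = img_t1.shape[0] // patch, img_t1.shape[1] // patch
    return (distance > threshold).reshape(rows, cols)

rng = np.random.default_rng(0)
patch, d_feat = 8, 16
w_embed = rng.normal(size=(patch * patch * 3, d_feat)) / np.sqrt(patch * patch * 3)

img_t1 = rng.random((32, 32, 3))                   # "before" image
img_t2 = img_t1.copy()                             # "after" image
img_t2[8:16, 16:24] = 1.0 - img_t2[8:16, 16:24]    # simulate a change in one patch

changed = change_map(img_t1, img_t2, w_embed, patch, threshold=0.1)
print(changed.astype(int))
```

Because both images pass through the same weights, unchanged regions map to the same features and their distance stays near zero. Only the altered patch (row 1, column 2 of the 4x4 patch grid) is flagged.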

Solving the “Semantic Gap” in Remote Sensing

One of the biggest hurdles in satellite imagery analysis is the semantic gap. This occurs when the model detects a visual difference (e.g., a tree changing color in autumn) but fails to understand that it is not a structural change (e.g., the tree being cut down). TransDS addresses this through semantic feature alignment.

By using deep contextual modeling, TransDS distinguishes between “pseudo-changes” and real, relevant changes.

- Seasonal Variations: Ignores changes caused by snow, leaves falling, or sun angles.

- Registration Errors: Compensates for slight misalignments between satellite passes.

- Object Recognition: Understands that a car moving in a parking lot is not a permanent land-use change.

Applications in Urban Planning and Development

Rapid urbanization requires constant monitoring. City planners use TransDS to automatically track new construction, illegal encroachments, and infrastructure development. The high precision of the model reduces the need for manual visual inspection, saving thousands of man-hours.

In fast-growing cities, TransDS can:

- Identify new roads and building footprints as soon as new imagery becomes available.

- Monitor compliance with zoning laws by detecting unauthorized structures.

- Assess green space reduction over time to aid in environmental planning.

The ability to process vast areas of satellite imagery allows municipal governments to maintain up-to-date cadastral maps without expensive aerial surveys.

Disaster Management and Damage Assessment

When natural disasters strike, speed is critical. TransDS plays a vital role in humanitarian aid by providing rapid damage assessment maps. By comparing pre-disaster and post-disaster imagery, the model can highlight destroyed buildings, flooded areas, and blocked roads.

- Earthquake Response: Quickly identifies collapsed structures in dense urban areas.

- Flood Mapping: Delineates the extent of water coverage to guide rescue boats.

- Wildfire Tracking: Maps burn scars to assess environmental impact and recovery needs.

This technology empowers first responders with actionable intelligence, directing resources to the areas where they are needed most effectively.

Environmental Monitoring: Deforestation and Climate Change

Protecting our planet requires accurate data. TransDS is a powerful ally in the fight against climate change. It is extensively used to monitor forestry and agriculture, detecting subtle shifts in land cover that indicate illegal logging or desertification.

- Deforestation Alerts: Detects loss of canopy cover in tropical rainforests with high temporal frequency.

- Crop Health: Monitors agricultural fields to predict yield and detect drought stress.

- Glacial Retreat: Tracks the shrinking of ice sheets over decades at the pixel level, within the limits of sensor resolution.

By automating these observations, researchers can build robust datasets to model climate trends and advocate for policy changes.

TransDS vs. Traditional Change Detection Methods

How does TransDS stack up against older methods like Image Differencing or standard U-Nets? The difference is stark, particularly in challenging conditions involving shadows or complex textures.

Table 1: Performance Comparison

| Feature | Traditional CNN (e.g., U-Net) | Image Differencing | TransDS (Transformer-based) |
| --- | --- | --- | --- |
| Global Context | Low | None | High |
| Noise Resistance | Moderate | Low | High |
| Pseudo-change Filter | Poor | Very Poor | Excellent |
| Computational Cost | Medium | Low | High (but efficient) |
| Accuracy (F1-Score) | ~75-85% | ~60% | >90% |
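For readers unfamiliar with the baseline and the metric in the table, the following sketch shows plain image differencing and an F1-score computed against a ground-truth mask. The toy arrays, the threshold of 50, and the function names are assumptions made for illustration; they are not taken from any benchmark.

```python
import numpy as np

def image_differencing(img_t1, img_t2, threshold):
    """Baseline: flag pixels whose mean absolute band difference exceeds a threshold."""
    diff = np.abs(img_t2.astype(float) - img_t1.astype(float)).mean(axis=-1)
    return diff > threshold

def f1_score(pred, truth):
    """F1 = 2*TP / (2*TP + FP + FN) for the 'changed' class."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.sum(pred & truth)
    fp = np.sum(pred & ~truth)
    fn = np.sum(~pred & truth)
    denom = 2 * tp + fp + fn
    return float(2 * tp / denom) if denom else 0.0

# Toy 4x4 scene with three bands.
img_t1 = np.zeros((4, 4, 3), dtype=np.uint8)
img_t2 = img_t1.copy()
img_t2[0, 0:3] = 200   # real change that is visible in the pixel values
img_t2[3, 3] = 200     # pseudo-change (e.g. a shadow), not in the ground truth

truth = np.zeros((4, 4), dtype=bool)
truth[0, 0:4] = True   # four truly changed pixels; one is invisible to differencing

pred = image_differencing(img_t1, img_t2, threshold=50)
score = f1_score(pred, truth)
print(score)  # 0.75
```

Here the baseline finds three of the four changed pixels and raises one false alarm, giving precision and recall of 0.75 each. The false alarm is exactly the kind of pseudo-change that pixel-level differencing cannot filter out.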

Implementing TransDS: Tools and Frameworks

For developers and data scientists looking to implement TransDS, the ecosystem is built primarily on Python and deep learning libraries. PyTorch is the most common framework for research implementations, largely because its eager execution model makes it easy to prototype and debug custom attention modules.

Key libraries include:

- PyTorch/TensorFlow: The backbone deep learning frameworks.

- OpenCV: For image pre-processing and augmentation.

- Hugging Face Transformers: While mostly for NLP, their Vision Transformer (ViT) modules are often adapted for TransDS architectures.

Challenges and Computational Requirements

While powerful, TransDS is not without its costs. Transformers are computationally expensive. The memory needed by the self-attention mechanism grows quadratically with the number of image patches (tokens), so doubling the side length of an image increases attention memory roughly sixteenfold. This means high-end GPUs are often necessary for training and inference.

- Hardware: Requires NVIDIA GPUs (e.g., A100 or V100) for efficient training on large datasets.

- Data Hunger: Like most Transformer-based models, TransDS typically needs large labeled datasets to generalize well.

- Complexity: Tuning hyperparameters for the attention heads can be more difficult than standard CNNs.

However, techniques such as windowed attention, as used in the Swin Transformer, are currently being integrated into TransDS to lower these computational barriers.
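A quick back-of-the-envelope calculation shows why attention cost matters and why windowing helps. The sketch below counts only the attention weight matrix for one layer, one head, and one image in float32; the patch size of 4 and window of 8x8 tokens are illustrative assumptions, and real models carry additional memory for activations and gradients.

```python
def attention_matrix_bytes(height, width, patch, heads=1, bytes_per_value=4):
    """Memory for one layer's global attention weights (float32 by default)."""
    n_tokens = (height // patch) * (width // patch)
    return heads * n_tokens * n_tokens * bytes_per_value

def windowed_attention_bytes(height, width, patch, window, heads=1, bytes_per_value=4):
    """Same, with attention restricted to non-overlapping windows of
    window x window tokens (assumes the token grid divides evenly)."""
    n_tokens = (height // patch) * (width // patch)
    tokens_per_window = window * window
    n_windows = n_tokens // tokens_per_window
    return heads * n_windows * tokens_per_window ** 2 * bytes_per_value

for size in (256, 512, 1024):
    full = attention_matrix_bytes(size, size, patch=4)
    local = windowed_attention_bytes(size, size, patch=4, window=8)
    print(f"{size}x{size}: global {full / 2**20:.0f} MiB, windowed {local / 2**20:.2f} MiB")
```

Under these assumptions, global attention needs 64 MiB at 256x256, 1024 MiB at 512x512, and 16384 MiB at 1024x1024, a sixteenfold jump each time the side length doubles. Windowed attention grows only fourfold per doubling (1, 4, and 16 MiB), because its cost is linear in the number of tokens.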

Dataset Preparation for TransDS Training

The fuel for any AI model is data. For TransDS, high-quality, bi-temporal datasets are essential. These datasets consist of pairs of images (Time 1 and Time 2) and a “ground truth” mask indicating where changes occurred.

Popular datasets used for benchmarking TransDS include:

- LEVIR-CD: A large-scale dataset for building change detection.

- WHU Building Dataset: Focused on aerial imagery of urban changes.

- DSIFN: A dataset specifically designed to test change detection in varying resolutions.

Proper preprocessing, including normalization and data augmentation (rotating, flipping images), is crucial to prevent the model from overfitting.
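One detail is easy to get wrong with bi-temporal data: geometric augmentations must be applied identically to both images and to the mask, or the labels no longer line up. The sketch below illustrates this with NumPy. The function names, the synthetic images, and the mean/std values of 0.5 are placeholders chosen for the example, not recommended settings.

```python
import numpy as np

def normalize(image, mean, std):
    """Scale an (H, W, C) uint8 image to [0, 1], then standardize per channel."""
    return (image.astype(np.float32) / 255.0 - mean) / std

def augment_pair(img_t1, img_t2, mask, rng):
    """Apply the same random horizontal flip and 90-degree rotation
    to both dates and to the ground-truth mask."""
    if rng.random() < 0.5:
        img_t1, img_t2, mask = (np.flip(a, axis=1) for a in (img_t1, img_t2, mask))
    k = int(rng.integers(0, 4))  # number of 90-degree turns
    img_t1, img_t2, mask = (np.rot90(a, k, axes=(0, 1)) for a in (img_t1, img_t2, mask))
    return img_t1.copy(), img_t2.copy(), mask.copy()

rng = np.random.default_rng(0)
img_t1 = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)
img_t2 = img_t1.copy()
img_t2[10:20, 30:50] = 255 - img_t2[10:20, 30:50]   # synthetic "new building"
mask = (img_t1 != img_t2).any(axis=-1)               # ground-truth change mask

aug_t1, aug_t2, aug_mask = augment_pair(img_t1, img_t2, mask, rng)

mean = np.array([0.5, 0.5, 0.5], dtype=np.float32)   # placeholder statistics
std = np.array([0.5, 0.5, 0.5], dtype=np.float32)
x_t1 = normalize(aug_t1, mean, std)
x_t2 = normalize(aug_t2, mean, std)
print(x_t1.shape, x_t2.shape, aug_mask.shape)
```

After augmentation the mask still marks exactly the pixels where the two images differ, which is a cheap sanity check worth running on any data pipeline for change detection.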

Case Study: Urban Expansion in Shenzhen

To illustrate the power of TransDS, let’s look at a real-world application in tracking the rapid urbanization of Shenzhen, China. Researchers applied the TransDS framework to satellite imagery spanning five years.

- Challenge: Distinguishing between high-rise construction and temporary scaffolding.

- Solution: TransDS utilized its global context awareness to identify consistent structural changes.

- Result: The model achieved an F1-score of 92%, significantly outperforming previous CNN-based attempts which often confused shadows from new skyscrapers with actual land changes. This data helped city officials plan new public transit routes.

Future Trends: TransDS and Self-Supervised Learning

The future of TransDS lies in reducing the dependency on labeled data. Labeling change detection datasets is incredibly labor-intensive. Researchers are now combining TransDS with self-supervised learning.

In this paradigm, the model learns to understand images by solving pretext tasks (like reconstructing masked parts of an image) before being fine-tuned for change detection. This “pre-training” allows TransDS to achieve high accuracy with only a fraction of the labeled examples previously required, democratizing the technology for smaller organizations.
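The masked-reconstruction pretext task mentioned above can be sketched in a few lines. This is only the data side of the idea, under stated assumptions: patches are hidden at random, and the loss is measured only on hidden pixels. The mask ratio of 0.75, the patch size, and the function names are illustrative, and no actual model is trained here.

```python
import numpy as np

def mask_random_patches(image, patch, mask_ratio, rng):
    """Zero out a random subset of non-overlapping patches.

    Assumes height and width are multiples of `patch`.
    Returns the masked image and a boolean (H, W) array marking hidden pixels.
    """
    h, w = image.shape[:2]
    rows, cols = h // patch, w // patch
    n_hidden = int(round(mask_ratio * rows * cols))
    hidden = np.zeros(rows * cols, dtype=bool)
    hidden[rng.choice(rows * cols, size=n_hidden, replace=False)] = True
    hidden = hidden.reshape(rows, cols)
    # Expand the patch grid to pixel resolution.
    pixel_mask = np.repeat(np.repeat(hidden, patch, axis=0), patch, axis=1)
    masked = image.copy()
    masked[pixel_mask] = 0.0
    return masked, pixel_mask

def reconstruction_loss(prediction, target, pixel_mask):
    """Mean squared error computed only over the hidden pixels."""
    return float(((prediction - target)[pixel_mask] ** 2).mean())

rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))
masked, pixel_mask = mask_random_patches(image, patch=8, mask_ratio=0.75, rng=rng)

print(pixel_mask.mean())  # 0.75
# A trivial "prediction" that leaves the holes empty scores poorly;
# a trained model would try to drive this loss toward zero.
print(reconstruction_loss(masked, image, pixel_mask))
```

No labels are needed for this task, since the original image serves as its own target. That is what lets pre-training use large archives of unlabeled satellite imagery.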

TransDS in the Commercial Sector

Beyond academia and government, TransDS is finding a home in the private sector. Insurance companies, real estate developers, and hedge funds are leveraging this tech for competitive advantage.

- Insurance: Validating claims by verifying damage dates via satellite history.

- Real Estate: Scouting undeveloped land and monitoring competitor construction speeds.

- Supply Chain: Monitoring raw material stockpiles (like coal or lumber) in open-air depots.

How to Get Started with TransDS

If you are a developer or GIS analyst, starting with TransDS involves a learning curve. Begin by mastering the fundamentals of Vision Transformers (ViT).

- Learn the Basics: Understand the “Query,” “Key,” and “Value” concepts of attention mechanisms.

- Clone a Repository: Look for open-source implementations of change detection transformers on GitHub (e.g., BIT, ChangeFormer).

- Experiment: Start with a small dataset like LEVIR-CD to train your first model.

- Join the Community: Follow computer vision conferences such as CVPR and ICCV, where cutting-edge TransDS variations are presented.

Frequently Asked Questions

What makes TransDS different from a standard Siamese network?

Standard Siamese networks typically use CNNs (Convolutional Neural Networks) as their backbone. TransDS replaces or augments these CNNs with Transformer modules. This allows the model to capture long-range dependencies and global context, which standard CNNs struggle with, leading to higher accuracy in detecting complex changes.

Do I need a supercomputer to run TransDS?

Not for inference (running the model), but training can be heavy. While training a TransDS model from scratch requires significant GPU power (like high-end NVIDIA cards), using a pre-trained model for inference can be done on standard professional workstations or cloud computing instances.

Can TransDS be used for video change detection?

Yes, the principles of TransDS are applicable to video. While this article focuses on bi-temporal static imagery (satellite photos), the attention mechanisms in Transformers extend naturally to sequences of frames, allowing for anomaly detection in surveillance feeds.

Is TransDS open-source software?

TransDS refers to a class of model architectures rather than a single software product. Many researchers publish their specific TransDS implementations as open-source code on platforms like GitHub. However, commercial geospatial platforms may incorporate proprietary versions of these models.

How does TransDS handle cloudy satellite images?

Clouds are a major issue in remote sensing. While TransDS has better context awareness to ignore thin clouds or shadows (classifying them as pseudo-changes), heavy cloud cover usually blocks the optical data entirely. In such cases, TransDS is often paired with SAR (Synthetic Aperture Radar) data which can penetrate clouds.

What is the learning curve for using TransDS?

For a data scientist familiar with Python and PyTorch, the transition is moderate. Understanding the mathematics of Self-Attention is the steepest hurdle. For non-coders, using TransDS would require a user-friendly GIS software interface that wraps the complex code behind a GUI.

Will TransDS replace human analysts?

No, it will augment them. TransDS excels at processing vast amounts of data to flag potential changes, but human expertise is still required to interpret the reason for the change, validate the results in ambiguous cases, and make policy decisions based on the data.

Conclusion

As we stand on the brink of a new era in geospatial intelligence, TransDS serves as a beacon of innovation. By successfully marrying the detail-oriented nature of convolutional networks with the context-aware power of Transformers, it has solved one of the most persistent challenges in remote sensing: accurate, robust change detection.

From protecting our rainforests to planning the smart cities of tomorrow, the applications of this technology are limitless. For tech professionals and industry leaders, ignoring TransDS is no longer an option. It is the engine driving the next generation of earth observation. As algorithms become more efficient and hardware more powerful, we can expect TransDS to become the standard, providing us with a clearer, faster, and more accurate view of our changing world. Embracing this technology today ensures that we are prepared to face the global challenges of the future.